GPGPU Offload Phase 1

Overview

As part of an ADAS project using embedded Nvidia GPUs, we are investigating the current state of GPGPU support, including development, debug, and performance analysis tools, starting with Nvidia GPUs. Since large, proprietary customer applications can be difficult to work with, we needed a simpler test case that we could modify and extend. We decided to create an open source test application that has some of the characteristics of a typical production ADAS application. Since there is a lot of public research into lane detection and warning systems, as well as some free software to leverage in the implementation, we chose to build a simple Lane Departure Warning System (LDWS) application.

Lane Departure Warning System Overview

Overview

LDWS is an OSS application which serves as our test bed for GPGPU tool evaluation. The phase 1 version can be found on the ldws-p1 branch in the LDWS repository. LDWS is a C++ application developed using OpenCV 3 and is under a mix of Apache and MIT licenses. Complete build and use documentation can be found in the project README.

Requirements

The phase 1 requirements are:

- Run on Linux desktop system with Nvidia GPU

- Be written in C/C++

- Leverage both OpenCV and existing FOSS lane detection projects

- Accept video input from file

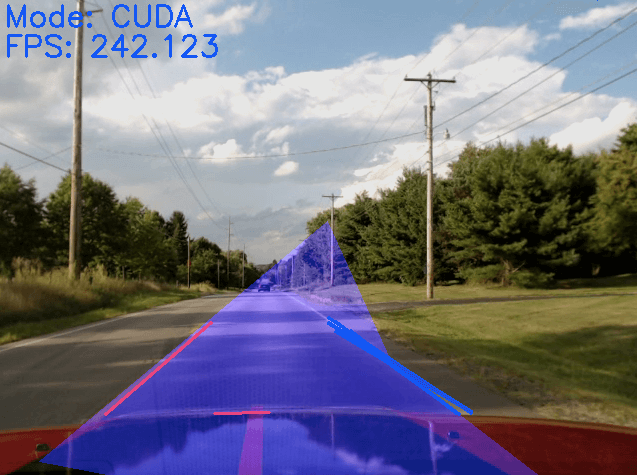

- Detect lanes in video and highlight the current lane

- Detect position in lane and warn if crossing lane marker

- Lane detection and warning need only be good enough quality to demonstrate concept

- Support two different test videos

- Support CPU only and OpenCL offload modes via runtime selection

- Display realtime and average frames per second

Design

LDWS is implemented as a C++ application leveraging cmake for build, OpenCV 3 for image processing, tclap for command line arg processing, and libconfig++ for configuration file processing. It is composed of a main video handling loop, a configuration storage class, and a lane detection class.

On initialization, a config store is instantiated which reads all configuration variables from a combination of command line options and configuration file options. A file or capture device is opened to provide the video feed to the video handler. Each video frame is then processed using a sequence of algorithms to detect the set of lines present. Using the detected lines, the ProcessLanes() method of the LaneDetector class then determines lane boundaries and draws the detected boundaries on the video for output. The video handler computes frames per second values and draws the processed video frame before fetching another frame of input video.

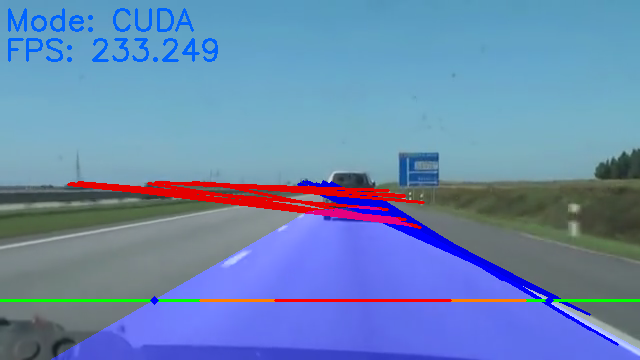

During development, we decided to pull the Phase 2 requirement of CUDA support into Phase 1, so LDWS supports runtime switching (via a command line switch) between CPU-only, OpenCL-offload, and CUDA-offload modes. During execution, LDWS displays the mode as CPU, OpenCL, or CUDA along with the measured per-frame FPS value. When a video input file completes, LDWS prints the average FPS maintained over the entire run.

The basic image processing algorithms that LDWS employs are:

- Select a region of interest that excludes portions of the video above the convergence point of the lane markers.

- Color conversion to grayscale to reduce the data set

- Gaussian blur to reduce image noise

- Canny edge detector to find all defined edges in the image

- Hough transform line detector to find all lines in the image
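The voting idea behind the final step in the list above can be illustrated without OpenCV. The following is a minimal sketch of the standard Hough transform, not the probabilistic variant (cv::HoughLinesP) that LDWS calls, and not code from the project. The names HoughLine and StrongestLine, the flat row-major edge image, and the one-degree angular resolution are all assumptions made for illustration.

```cpp
#include <cmath>
#include <cstddef>
#include <cstdint>
#include <vector>

struct HoughLine {
    double rho;    // signed distance from the image origin to the line, in pixels
    double theta;  // angle of the line normal, in radians, in [0, pi)
    int votes;     // number of edge pixels that voted for this line
};

// Standard Hough transform: every non-zero edge pixel (x, y) votes for all
// (rho, theta) bins satisfying rho = x*cos(theta) + y*sin(theta). The bin
// with the most votes corresponds to the most strongly supported line.
HoughLine StrongestLine(const std::vector<std::uint8_t>& edges,
                        int width, int height, int theta_steps = 180) {
    const double kPi = std::acos(-1.0);
    const int max_rho = static_cast<int>(std::ceil(std::hypot(width, height)));
    const int rho_bins = 2 * max_rho + 1;  // rho spans [-max_rho, max_rho]
    std::vector<int> acc(static_cast<std::size_t>(rho_bins) * theta_steps, 0);

    for (int y = 0; y < height; ++y) {
        for (int x = 0; x < width; ++x) {
            if (edges[y * width + x] == 0) continue;  // not an edge pixel
            for (int t = 0; t < theta_steps; ++t) {
                const double theta = t * kPi / theta_steps;
                const int rho = static_cast<int>(
                    std::lround(x * std::cos(theta) + y * std::sin(theta)));
                ++acc[(rho + max_rho) * theta_steps + t];
            }
        }
    }

    // Scan the accumulator for the bin with the highest vote count.
    HoughLine best{0.0, 0.0, 0};
    for (int r = 0; r < rho_bins; ++r) {
        for (int t = 0; t < theta_steps; ++t) {
            const int votes = acc[r * theta_steps + t];
            if (votes > best.votes) {
                best = {static_cast<double>(r - max_rho),
                        t * kPi / theta_steps, votes};
            }
        }
    }
    return best;
}
```

The triple loop over pixels and angles shows why this step tends to dominate CPU time: the work grows with the number of edge pixels times the angular resolution.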

LDWS leverages the lane detection algorithm implemented in the opencv-lane-vehicle-track project and the lane departure warning algorithm implemented in the Python-based Lane Departure Warning project. The lane detection algorithm performs a simple angular filtering of lines followed by a selection of the best line based on the distance of points from the midpoint. The lane departure sensing uses a simple line crossing methodology. A horizontal meter line is drawn on the display, with the current crossing points of the detected lane marker edges tracked by dots on each end of the meter. If the positioning dots move too far in either direction on the meter, a threshold event indicates that the vehicle is moving out of the lane.
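The angular filtering and the meter-line threshold check can be sketched as follows. This is an illustrative approximation under stated assumptions, not the code from either referenced project: the Segment type, the function names, the minimum-angle threshold, and the choice to compare the midpoint of the two crossing points against the image center are all hypothetical.

```cpp
#include <cmath>
#include <vector>

// A detected line segment in image coordinates (hypothetical type).
struct Segment { double x1, y1, x2, y2; };

// Angle of a segment relative to the horizontal axis, in degrees [0, 90].
double AngleFromHorizontalDeg(const Segment& s) {
    const double kPi = std::acos(-1.0);
    const double angle =
        std::atan2(std::fabs(s.y2 - s.y1), std::fabs(s.x2 - s.x1));
    return angle * 180.0 / kPi;
}

// Angular filter: discard near-horizontal segments, which are unlikely to be
// lane markers seen from a forward-facing camera. The threshold is assumed.
std::vector<Segment> FilterByAngle(const std::vector<Segment>& lines,
                                   double min_angle_deg) {
    std::vector<Segment> kept;
    for (const Segment& s : lines) {
        if (AngleFromHorizontalDeg(s) >= min_angle_deg) kept.push_back(s);
    }
    return kept;
}

enum class LaneStatus { InLane, DepartingLeft, DepartingRight };

// left_x and right_x are where the detected lane edges cross the horizontal
// meter line; center_x is the image center (the assumed vehicle position).
// A departure is flagged when the lane midpoint drifts more than max_offset
// pixels away from center_x.
LaneStatus CheckDeparture(double left_x, double right_x,
                          double center_x, double max_offset) {
    const double lane_center = 0.5 * (left_x + right_x);
    const double offset = lane_center - center_x;
    // The lane appearing shifted right means the vehicle has drifted left.
    if (offset > max_offset) return LaneStatus::DepartingLeft;
    if (offset < -max_offset) return LaneStatus::DepartingRight;
    return LaneStatus::InLane;
}
```

For example, with a 640-pixel-wide frame and crossing points at 300 and 540, the lane midpoint is 420, which is 100 pixels right of center and would trigger a left-departure warning for any max_offset below 100.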

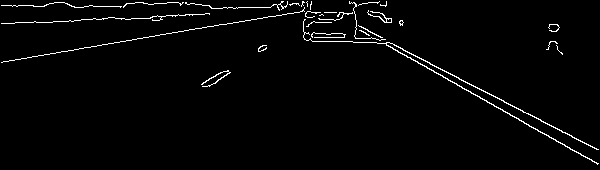

LDWS provides a command line option to enable display of intermediate video frame data during each step of the image processing sequence. These screenshots show the detected edges and lines, respectively, for one frame of video.

Limitations and Improvements

The simple algorithms employed result in a lane detection system of only good-enough quality. Canny edge and Hough line detection from a vehicle-mounted camera perspective is highly susceptible to shadows from trees and other overhead objects on the road, and performs poorly in low-light or night conditions. Note the shadows in the following frame on a sunny day.

Notice how the Canny edge detector finds the horizontal edge of the shadows in the region of interest.

The lane detection algorithm itself assumes the vehicle is always at the midpoint of the image, which is not the case when changing lanes, so during lane changes the algorithm will vote up lines that are not actually lane markers. As a result of all these factors, the application loses track of lane markers in all but ideal conditions. The following is an example of it losing sync periodically.

Functional improvements can be made by employing one or more of the following methodologies:

- Inverse Perspective Mapping (IPM) using homography for “bird's-eye view” based line detection

- RANSAC Spline Fitting to improve identification of lane marker lines

- Utilize color data in lane marker detection

- B-Snake lane model for curved lane detection

Some or all of these approaches are generally combined with the existing basic Canny edge and Hough transform algorithms for a production grade system.

Performance

Background

Describe three modes of operation and limits of tools.

Summary

Explain perf stat CPU utilization and in-app FPS figures.

| Mode | CPU% | FPS |
|---|---|---|
| CPU | 87.2 | 339.3 |
| CUDA | 87.2 | 359.8 |
| OpenCL | 195.4 | 235.0 |

Analysis

Our performance analysis is based on statistical sampling using the Linux perf tool. We start by examining the CPU mode case in the following perf report fragment. The important aspect to note is the top offender in our application, which is the call to cv::HoughLinesProbabilistic. That is by far the biggest consumer of CPU cycles in the application. This is completely expected, as our lane detection algorithm relies heavily on detecting all lines in each frame of the video feed. You may notice other curious consumers of CPU time in libQt5Gui and libavcodec-ffmpeg.so. These are products of displaying each resulting frame in the UI and of capturing and decoding video frames from our source video feed. Since we are only concerned with the portion of our ADAS algorithms that could be offloaded to a GPU, we discard these areas as out-of-scope for this particular project. In a production system, we would avoid using inefficient OpenCV blending/drawing paths and would utilize hardware-assisted decode of video frames.

    # Overhead  Samples  Command / Shared Object / Symbol
    # ................  ........................................
    #
      99.67%  5551  ldws
        32.34%  1441  libopencv_imgproc.so.3.1.0
          21.51%  856  [.] cv::HoughLinesProbabilistic
                  |
                  --21.48%--_start
                            __libc_start_main
                            main
                            cv::HoughLinesP
                            cv::HoughLinesProbabilistic
          2.40%  252  [.] cv::CvtColorLoop_Invoker<cv::RGB2Gray<unsigned char> >::operator()
                  |
                  ---__clone
                     start_thread
                     cv::ForThread::thread_loop_wrapper
                     cv::CvtColorLoop_Invoker<cv::RGB2Gray<unsigned char> >::operator()
          2.11%  77  [.] cv::FillConvexPoly
                  |
                  --2.06%--_start
                           __libc_start_main
                           main
        28.67%  1048  libQt5Gui.so.5.6.1
          2.54%  91  [.] 0x00000000002f3a13
                  |
                  ---0x7f0b431dfb26
                     0x7f0b431e3012
                     0x7f0b431bca13
        12.49%  765  libavcodec-ffmpeg.so.56.60.100
          no entry >= 2.00%
        5.12%  204  libopencv_core.so.3.1.0
          4.55%  164  [.] cv::hal::addWeighted8u
                  |
                  ---_start
                     __libc_start_main
                     main
                     LaneDetector::ProcessLanes
                     cv::addWeighted
                     cv::arithm_op
                     cv::hal::addWeighted8u

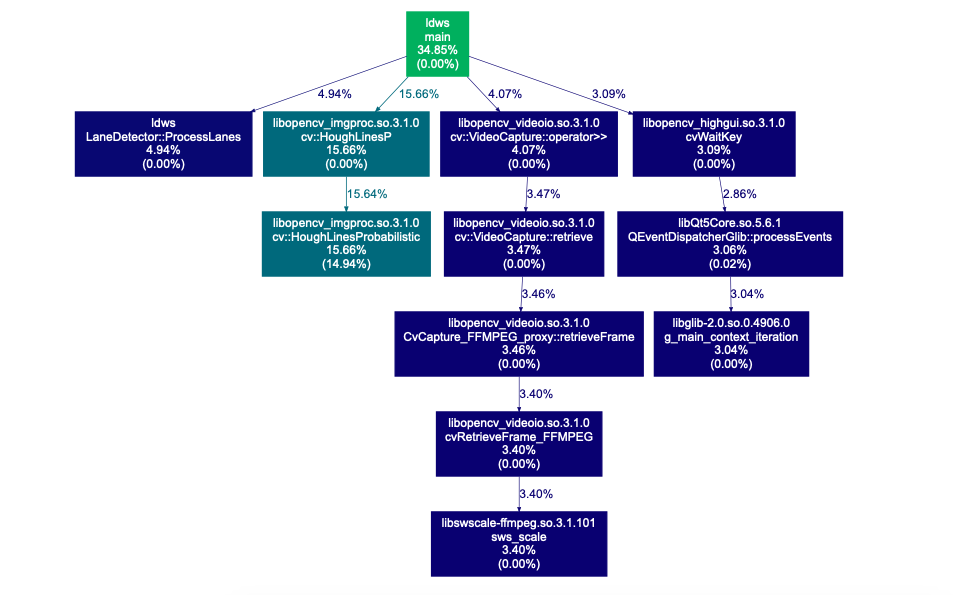

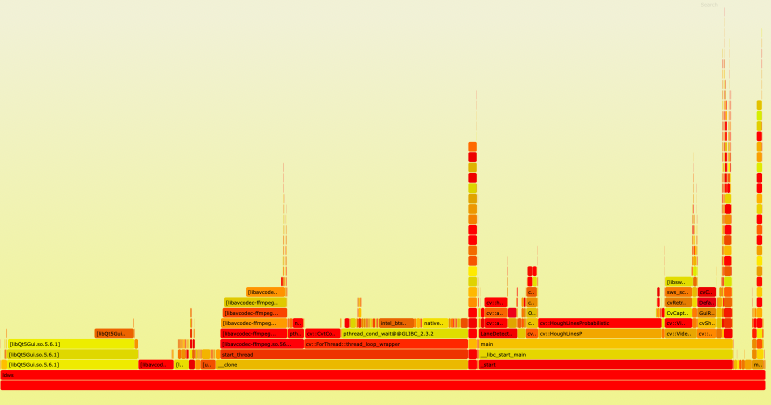

Another way to visualize our CPU usage is with the following flame graph. Note that the widest elements in the graph are the hot spots in CPU utilization. If we again discard the video input and UI areas, it can be seen that cv::HoughLinesProbabilistic() is the top offender. Just to the left, it can be seen that LaneDetector::ProcessLanes() is a close second. We know from our development of the lane detection algorithm that the ProcessLanes() functionality is not going to be offloaded, as it is basic post-processing of the lines gathered from the Hough transform.

Another way to look at our application is with a traditional callgraph. By zooming in on our area of concern, we get the results seen in the following diagram. Once again, excluding those paths on the right that are out-of-scope, we can clearly see that cv::HoughLinesProbabilistic is a great place to focus on performance improvements.